Meteorology

2007 Schools Wikipedia Selection. Related subjects: Climate and the Weather


Meteorology is the interdisciplinary scientific study of the atmosphere that focuses on weather processes and forecasting. Meteorological phenomena are observable weather events which illuminate and are explained by the science of meteorology. Those events are bounded by the variables of Earth's atmosphere: temperature, pressure, water vapor, the gradients and interactions of each variable, and how they change over time. Most of Earth's observed weather occurs in the troposphere.

Meteorology, climatology, atmospheric physics, and atmospheric chemistry are sub-disciplines of the atmospheric sciences. Meteorology and hydrology together make up the interdisciplinary field of hydrometeorology.

Interactions between the atmosphere and the oceans are part of coupled ocean-atmosphere studies. Meteorology has applications in many diverse fields such as the military, energy production, farming, shipping and construction.

History of meteorology

Early achievements in meteorology

- 350 BC

The term meteorology comes from Aristotle's Meteorology.

Although the term meteorology is used today to describe a subdiscipline of the atmospheric sciences, Aristotle's work is more general. The work touches upon much of what is known as the earth sciences. In his own words:

...all the affections we may call common to air and water, and the kinds and parts of the earth and the affections of its parts.

One of the most impressive achievements in Meteorology is his description of what is now known as the hydrologic cycle:

Now the sun, moving as it does, sets up processes of change and becoming and decay, and by its agency the finest and sweetest water is every day carried up and is dissolved into vapour and rises to the upper region, where it is condensed again by the cold and so returns to the earth.

- 1607

Galileo Galilei constructs a thermoscope. Not only did this device measure temperature, it also represented a paradigm shift: up to this point, heat and cold were believed to be qualities of Aristotle's elements (fire, water, air, and earth). There is some controversy about who actually built the first thermoscope, and some evidence that the device was built independently at several different times. This is the era of the first recorded meteorological observations. As there was no standard measurement, they were of little use until the work of Daniel Gabriel Fahrenheit and Anders Celsius in the 18th century.

- 1643

Evangelista Torricelli, a contemporary and one-time assistant of Galileo, creates the first man-made sustained vacuum and, in the process, the first barometer. Changes in the height of mercury in this Torricelli tube lead to his discovery that atmospheric pressure changes over time.

- 1648

Blaise Pascal discovers that atmospheric pressure decreases with height, and deduces that there is a vacuum above the atmosphere.

- 1667

Robert Hooke builds an anemometer to measure windspeed.

- 1686

Edmund Halley maps the trade winds, deduces that atmospheric changes are driven by solar heat, and confirms the discoveries of Pascal about atmospheric pressure.

- 1735

George Hadley is the first to take the rotation of the Earth into account to explain the behaviour of the trade winds. Although the mechanism Hadley described was incorrect, predicting trade winds half as strong as the actual winds, the circulating cells that Hadley described later became known as Hadley cells.

- 1743-1784

Benjamin Franklin observes that weather systems in North America move from west to east, demonstrates that lightning is electricity, publishes the first scientific chart of the Gulf Stream, links a volcanic eruption to weather, and speculates about the effect of deforestation on climate.

- 1780

Horace de Saussure constructs a hair hygrometer to measure humidity.

- 1802-1803

Luke Howard writes On the Modification of Clouds in which he assigns cloud types Latin names.

- 1806

Francis Beaufort introduces his system for classifying wind speeds.

- 1838

William Reid publishes his controversial Law of Storms, which splits the meteorological establishment into two camps in regard to low pressure systems. It would take over ten years of debate to reach a consensus on the behaviour of low pressure systems.

- 1841

Elias Loomis is the first person known to attempt to devise a theory of frontal zones. This idea did not catch on until it was expanded upon by the Norwegians in the years following World War I.

Observation networks and weather forecasting

The arrival of the electrical telegraph in 1837 afforded, for the first time, a practical method for quickly gathering information on surface weather conditions from over a wide area. These data could be used to produce maps of the state of the atmosphere for a region near the Earth's surface and to study how these states evolved through time. Making frequent weather forecasts based on these data required a reliable network of observations, but it was not until 1849 that the Smithsonian Institution began to establish an observation network across the United States under the leadership of Joseph Henry. Similar observation networks were established in Europe at this time. In 1854, the United Kingdom government appointed Robert FitzRoy to the new office of Meteorological Statist to the Board of Trade with the role of gathering weather observations at sea. FitzRoy's office became the United Kingdom Meteorological Office in 1854, the first national meteorological service in the world. The first daily weather forecasts made by FitzRoy's office were published in The Times newspaper in 1860. The following year a system was introduced of hoisting storm warning cones at principal ports when a gale was expected.

Over the next 50 years many countries established national meteorological services: the Finnish Meteorological Central Office (1881) was formed from part of the Magnetic Observatory of Helsinki University; the India Meteorological Department (1889) was established following tropical cyclone and monsoon related famines in the previous decades; the United States Weather Bureau (1890) was established under the Department of Agriculture; and the Australian Bureau of Meteorology (1905) was established by a Meteorology Act to unify existing state meteorological services.

The Coriolis effect

At first, the kinematics of exactly how the rotation of the Earth affects airflow was only partially understood. Late in the 19th century, the full extent of the large-scale interaction between the pressure gradient force and the deflecting force, which in the end causes air masses to move along isobars, was understood. Early in the 20th century this deflecting force was named the Coriolis effect after Gaspard-Gustave Coriolis, who had published in 1835 on the energy yield of machines with rotating parts, such as waterwheels. In 1856, William Ferrel proposed the existence of a circulation cell in the mid-latitudes with air being deflected by the Coriolis force to create the prevailing westerly winds.
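The balance between the pressure gradient force and the Coriolis force described above can be illustrated numerically. The following is a minimal sketch, not taken from the original article: it assumes the standard Coriolis parameter f = 2 Ω sin(latitude), a constant air density of 1.2 kg/m³, and an illustrative pressure gradient of 1 hPa per 100 km. The function names are hypothetical.

```python
import math

OMEGA = 7.2921e-5  # Earth's rotation rate in rad/s


def coriolis_parameter(lat_deg: float) -> float:
    """Coriolis parameter f = 2 * Omega * sin(latitude), in 1/s."""
    return 2.0 * OMEGA * math.sin(math.radians(lat_deg))


def geostrophic_speed(pressure_gradient: float, lat_deg: float,
                      air_density: float = 1.2) -> float:
    """Wind speed (m/s) at which the Coriolis force balances the
    pressure gradient force.

    pressure_gradient is the magnitude of the horizontal pressure
    gradient in Pa/m; air_density (kg/m^3) is an assumed constant.
    """
    f = coriolis_parameter(lat_deg)
    if f == 0.0:
        raise ValueError("geostrophic balance is undefined at the equator")
    return pressure_gradient / (air_density * abs(f))


# Illustrative values: 1 hPa (100 Pa) per 100 km at 45 degrees north.
speed = geostrophic_speed(pressure_gradient=100.0 / 100_000.0, lat_deg=45.0)
print(f"geostrophic wind speed: {speed:.1f} m/s")
```

With these assumed values the balanced wind is roughly 8 m/s and blows parallel to the isobars. The sketch ignores friction and curvature, so it applies only to large-scale flow away from the surface and the equator.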

Numerical weather prediction

In 1904 the Norwegian scientist Vilhelm Bjerknes first postulated that prognostication of the weather is possible from calculation based upon natural laws.

Early in the 20th century, advances in the understanding of atmospheric physics led to the foundation of modern numerical weather prediction. In 1922, Lewis Fry Richardson published Weather Prediction by Numerical Process, which described how small terms in the fluid dynamics equations governing atmospheric flow could be neglected to allow numerical solutions to be found. However, the sheer number of calculations required was too large to be completed before the advent of computers.

At this time in Norway a group of meteorologists led by Vilhelm Bjerknes developed the model that explains the generation, intensification and ultimate decay (the life cycle) of mid-latitude cyclones, introducing the idea of fronts, that is, sharply defined boundaries between air masses. The group included Carl-Gustaf Rossby (who was the first to explain the large scale atmospheric flow in terms of fluid dynamics), Tor Bergeron (who first determined the mechanism by which rain forms) and Jacob Bjerknes.

Starting in the 1950s, numerical experiments with computers became feasible. The first weather forecasts derived this way used barotropic (that is, single-vertical-level) models, and could successfully predict the large-scale movement of mid-latitude Rossby waves, that is, the pattern of atmospheric lows and highs.

In the 1960s, the chaotic nature of the atmosphere was first observed and understood by Edward Lorenz, founding the field of chaos theory. These advances have led to the current use of ensemble forecasting in most major forecasting centers, to take into account uncertainty arising due to the chaotic nature of the atmosphere.
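The sensitivity to initial conditions that motivates ensemble forecasting can be demonstrated with a small experiment. The sketch below is an illustration rather than anything from the original article: it assumes the well-known three-variable Lorenz system with its usual parameters (sigma = 10, rho = 28, beta = 8/3), a fourth-order Runge-Kutta integrator, and an arbitrary starting point and perturbation size. All function names are hypothetical.

```python
import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives of the three-variable Lorenz system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, factor):
        return tuple(b + factor * s for b, s in zip(base, slope))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(perturbation=1e-8, steps=4000, dt=0.01):
    """Distance between two runs whose initial x values differ slightly."""
    run_a = (1.0, 1.0, 1.0)
    run_b = (1.0 + perturbation, 1.0, 1.0)
    history = []
    for _ in range(steps):
        history.append(math.dist(run_a, run_b))
        run_a = rk4_step(run_a, dt)
        run_b = rk4_step(run_b, dt)
    return history


history = separation_history()
print(f"initial separation: {history[0]:.1e}")
print(f"largest separation: {max(history):.1f}")
```

Two runs that start almost identically end up as far apart as two unrelated states of the system. An ensemble forecast applies the same idea deliberately, running a model from many slightly different initial states to estimate how uncertain the forecast is.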

Satellite observation

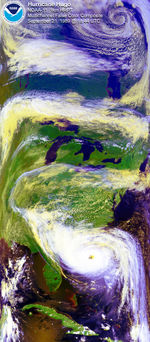

In 1960, the launch of TIROS-1, the first successful weather satellite, marked the beginning of the age in which weather information is available globally. Weather satellites, along with more general-purpose Earth-observing satellites circling the Earth at various altitudes, have become an indispensable tool for studying a wide range of phenomena from forest fires to El Niño.

In recent years, climate models have been developed that feature a resolution comparable to older weather prediction models. These climate models are used to investigate long-term climate shifts, such as what effects might be caused by human emission of greenhouse gases.

Weather forecasting

Although meteorologists now rely heavily on computer models (numerical weather prediction), it is still relatively common to use techniques and conceptual models that were developed before computers were powerful enough to make predictions accurately or efficiently (generally speaking, prior to around 1980). Many of these methods are used to determine how much skill a forecaster has added to the forecast (for example, how much better than persistence or climatology did the forecast do?). Similarly, they could also be used to determine how much skill the industry as a whole has gained with emerging technologies and techniques.
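One common way to express how much better a forecast did than persistence is a skill score based on mean squared error. The following is a minimal sketch under stated assumptions: the formula 1 - MSE(forecast) / MSE(reference) is a widely used convention, not something specified in the original article, and the temperatures and function names are hypothetical.

```python
def mean_squared_error(forecast, observed):
    """Average squared difference between forecast and observed values."""
    if len(forecast) != len(observed) or not observed:
        raise ValueError("series must be non-empty and of equal length")
    return sum((f - o) ** 2 for f, o in zip(forecast, observed)) / len(observed)


def skill_score(forecast, reference, observed):
    """1.0 is a perfect forecast, 0.0 matches the reference forecast,
    and negative values are worse than the reference."""
    reference_error = mean_squared_error(reference, observed)
    if reference_error == 0.0:
        raise ValueError("reference forecast is perfect; skill is undefined")
    return 1.0 - mean_squared_error(forecast, observed) / reference_error


def persistence_forecast(observations):
    """Persistence baseline: each day's forecast is the previous day's value."""
    return observations[:-1]


# Hypothetical daily maximum temperatures in degrees Celsius.
observations = [12.0, 14.0, 13.0, 17.0, 16.0]
issued = [13.0, 14.0, 16.0, 15.0]          # forecasts issued for days 2 to 5
verifying = observations[1:]               # what actually happened on those days
baseline = persistence_forecast(observations)

skill = skill_score(issued, baseline, verifying)
print(f"skill relative to persistence: {skill:.2f}")
```

A climatological baseline can be substituted for `baseline` in the same way, by passing the long-term average for each date as the reference series.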

- Persistence method

The persistence method assumes that conditions will not change, and is often summarised as "Tomorrow equals today". This method works best over short periods of time in stagnant weather regimes.

- Extrapolation method

This assumes that the systems in the atmosphere will propagate for some distance into the future at speeds similar to those seen in the past. This method works best over short periods of time, and works best if diurnal changes in the pressure and precipitation patterns are taken into account.

- Numerical forecasting method

The numerical weather prediction or NWP method uses computers to take into account a large number of variables and creates a computer model of the atmosphere. This is most successful when used with the methods below, and when model biases and relative skill are taken into account. In general, the ECMWF model outperforms the NCEP ensemble mean, which outperforms the UKMET/GFS models after 72 hours, which in turn outperform the NAM model at most time frames. This performance changes when tropical cyclones are taken into account, as the ECMWF, model ensemble methods, model consensus, GFS, UKMET, and NOGAPS all perform exceedingly well, with the NAM and Canadian GEM exhibiting lower accuracy.

- Consensus/ensemble methods of forecasting

Statistically, it is difficult to beat the mean solution, and the consensus and ensemble methods of forecasting take advantage of this by favoring only those models that have the greatest support from their ensemble means or other pieces of global model guidance. A local Hydrometeorological Prediction Centre study showed that using this method alone verifies 50-55% of the time.

- Trends method

The trends method involves determining the change in fronts and high and low pressure centers in the model runs over various lengths of time. If the trend is seen over a long enough time frame (24 hours or so), it is more meaningful. The forecast models have been known to overtrend, however, so use of this method verifies 55-60% of the time, more so in the surface pattern than aloft.

- Climatology/Analog method

The climatology or analog method involves using historical weather data collected over long periods of time (years) to predict conditions on a given date. A variation on this theme is the use of teleconnections, which rely upon the date and the expected position of other positive or negative 500 hPa height anomalies to give an impression of what the overall pattern would look like with this anomaly in place. This is of more significant help than a model trend, since it verifies roughly 75 percent of the time when used properly and with a stable anomaly centre. Another variation is the use of standard deviations from climatology in various meteorological fields. Once the pattern deviates more than 4-5 sigmas from climatology, it becomes an improbable solution.
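Three of the simpler ideas in the methods listed above can be sketched in a few lines: extrapolating a system's movement, averaging an ensemble, and measuring how many standard deviations a value lies from climatology. This is a rough illustration only. The function names, the one-dimensional treatment of position, and all of the numbers are assumptions made for the example, and operational centres use far more elaborate procedures.

```python
from statistics import mean, stdev


def extrapolate_position(earlier_km, later_km, hours_between, hours_ahead):
    """Extrapolation method: assume a system keeps its recent speed."""
    speed_km_per_hour = (later_km - earlier_km) / hours_between
    return later_km + speed_km_per_hour * hours_ahead


def ensemble_mean(member_forecasts):
    """Consensus/ensemble method: average the members at each forecast time."""
    lengths = {len(member) for member in member_forecasts}
    if len(lengths) != 1:
        raise ValueError("all members must cover the same forecast times")
    return [mean(values) for values in zip(*member_forecasts)]


def standardized_anomaly(value, climatology):
    """Number of sample standard deviations between value and the
    climatological mean."""
    return (value - mean(climatology)) / stdev(climatology)


# A low that moved 300 km in the last 6 hours, projected 12 hours ahead.
position = extrapolate_position(0.0, 300.0, hours_between=6.0, hours_ahead=12.0)

# Hypothetical sea-level pressure forecasts (hPa) from three models.
members = [[1000.0, 1004.0], [1002.0, 1008.0], [1004.0, 1006.0]]
consensus = ensemble_mean(members)

# Hypothetical 500 hPa heights (m) for the same date in past years.
past_heights = [5520.0, 5540.0, 5560.0, 5580.0, 5600.0]
sigmas = standardized_anomaly(5720.0, past_heights)

print(position, consensus, round(sigmas, 2))
```

In this made-up case the forecast height is more than five standard deviations above the climatological mean, which by the rule of thumb above would mark it as an improbable solution.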

Meteorology and climatology

With the development of powerful new supercomputers like the Earth Simulator in Japan, mathematical modeling of the atmosphere can reach unprecedented accuracy. This is due not only to the enhanced spatial and temporal resolution of the grids employed, but also to the ability of these more powerful machines to model the Earth as an integrated climate system, where atmosphere, ocean, vegetation, and man-made influences depend on each other realistically. The goal in global meteorological modeling can be termed Earth System Modeling, with a growing number of models of various processes coupled to each other. Predictions for global effects like global warming and El Niño are expected to benefit substantially from these advancements.

Regional models are attracting more interest as the resolution of global models increases. With regional weather disasters such as the Elbe flooding in 2002 and the European heat wave in 2003, decision makers expect these models to provide accurate assessments of the possible increase of these natural hazards in a specific region. Countermeasures such as dikes or intentional flooding might be effective in preventing or at least attenuating natural hazards.

For models at all scales, increased model resolution means less reliance on parameterizations, which are empirically derived expressions for processes that cannot be resolved on the model grid. For example, in mesoscale models individual clouds can now be resolved, removing the need for formulations that average over a grid box. In global modeling, atmospheric waves such as gravity waves with short temporal and spatial scales can be represented without resorting to often overly simplified parameterizations.