Synthesizer

2007 Schools Wikipedia Selection. Related subjects: Musical Instruments

A synthesizer (or synthesiser) is an electronic musical instrument designed to produce electronically generated sound, using techniques such as additive, subtractive, FM, physical modelling synthesis, or phase distortion.

Synthesizers create sounds through direct manipulation of electrical voltages (as in analog synthesizers), mathematical manipulation of discrete values using computers (as in software synthesizers), or by a combination of both methods. In the final stage of the synthesizer, electrical voltages generated by the synthesizer cause vibrations in the diaphragms of loudspeakers, headphones, etc. This synthesized sound is contrasted with recording of natural sound, where the mechanical energy of a sound wave is transformed into a signal which will then be converted back to mechanical energy on playback (though sampling synthesizers significantly blur this distinction).

Synthesizers typically have a keyboard which provides the human interface to the instrument and are often thought of as keyboard instruments. However, a synthesizer's human interface does not necessarily have to be a keyboard, nor does a synthesizer strictly need to be playable by a human. Fingerboard and ribbon-controlled synthesizers have also been developed. (See sound module.)

The term "speech synthesizer" is also used in electronic speech processing, often in connection with vocoders.

Sound basics

When natural tonal instruments' sounds are analyzed in the frequency domain, the spectra of tonal instruments exhibit amplitude peaks at the harmonics. These harmonics' frequencies are primarily located close to the integer multiples of the tone's fundamental frequency.

Percussives and rasps usually lack harmonics, and exhibit spectra that are comprised mainly of noise shaped by the resonant frequencies of the structures that produce the sounds. The resonant properties of the instruments (the spectral peaks of which are also referred to as formants) also shape the spectra of string, wind, voice and other natural instruments.

In most conventional synthesizers, for purposes of resynthesis, recordings of real instruments can be thought to be composed of several components.

These component sounds represent the acoustic responses of different parts of the instrument, the sounds produced by the instrument during different parts of a performance, or the behaviour of the instrument under different playing conditions (pitch, intensity of playing, fingering, etc.). The distinctive timbre, intonation and attack of a real instrument can therefore be created by mixing together these components in a way that resembles the natural behaviour of the real instrument. Nomenclature varies by synthesizer methodology and manufacturer, but the components are often referred to as oscillators or partials. A higher fidelity reproduction of a natural instrument can typically be achieved using more oscillators, but increased computational power and human programming are required, and most synthesizers use between one and four oscillators by default.

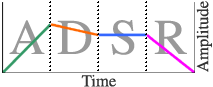

One of the most important parts of any sound is its amplitude envelope. This envelope determines whether the sound is percussive, like a snare drum, or persistent, like a violin string. Most often, this shaping of the sound's amplitude profile is realized with an "ADSR" (Attack Decay Sustain Release) envelope model applied to control oscillator volumes. Apart from Sustain, each of these stages is modeled by a change in volume (typically exponential).

- Attack time is the time taken for initial run-up of the sound level from nil to 100%.

- Decay time is the time taken for the subsequent run down from 100% to the designated Sustain level.

- Sustain level, the third stage, is the steady volume produced when a key is held down.

- Release time is the time taken for the sound to decay from the Sustain level to nil when the key is released.

If a key is released during the Attack or Decay stage, the Sustain phase is usually skipped. Similarly, a Sustain level of zero will produce a more-or-less piano-like (or percussive) envelope, with no continuous steady level, even when a key is held. Exponential rates are commonly used because they closely model real physical vibrations, which usually rise or decay exponentially.
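The four ADSR stages described above can be illustrated with a minimal sketch. It assumes linear segments for readability (real instruments more often use exponential curves, as noted above), and the function name `adsr_level` and its parameters are hypothetical choices made for this example.

```python
def adsr_level(t, attack, decay, sustain, release, note_off):
    """Return the envelope level (0.0 to 1.0) at t seconds after key-down.

    attack, decay and release are durations in seconds (all > 0),
    sustain is a level between 0.0 and 1.0, and note_off is the time
    at which the key is released. Linear segments are an assumption
    made for simplicity.
    """
    def held(time):
        # Level while the key is still held down.
        if time < attack:
            return time / attack                      # Attack: 0 -> 1
        if time < attack + decay:
            # Decay: 1 -> sustain level
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain                                # Sustain: steady

    if t < 0:
        return 0.0
    if t < note_off:
        return held(t)

    # Release starts from whatever level was reached at key-up, so an
    # early release skips the Sustain stage entirely.
    start = held(note_off)
    elapsed = t - note_off
    if elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)
```

Multiplying each oscillator sample by `adsr_level(...)` at the corresponding time gives the sound its loudness contour. Setting `sustain` to zero produces the percussive, piano-like shape mentioned above.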

Although the oscillations in real instruments also change frequency, most instruments can be modeled well without this refinement. This refinement is necessary to generate a vibrato.

Overview of popular synthesis methods

Subtractive synthesizers use a simple acoustic model that assumes an instrument can be approximated by a simple signal generator (producing sawtooth waves, square waves, etc.) followed by a filter which represents the frequency-dependent losses and resonances in the instrument body. For reasons of simplicity and economy, these filters are typically low-order lowpass filters. The combination of simple modulation routings (such as pulse width modulation and oscillator sync), along with the physically unrealistic lowpass filters, is responsible for the "classic synthesizer" sound commonly associated with "analog synthesis", a term often mistakenly used when referring to software synthesizers using subtractive synthesis. Although physical modeling synthesis, in which the sound is generated according to the physics of the instrument, has superseded subtractive synthesis for accurately reproducing natural instrument timbres, the subtractive synthesis paradigm is still ubiquitous in synthesizers, with most modern designs still offering low-order lowpass or bandpass filters following the oscillator stage.
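The oscillator-then-filter model described above can be sketched in a few lines. This is only an illustration under stated assumptions: the sawtooth is a naive (not band-limited) waveform, the filter is a first-order lowpass with no resonance, and the function names are hypothetical.

```python
import math

def sawtooth(freq, sample_rate, n):
    """Naive sawtooth oscillator producing n samples in the range [-1, 1)."""
    return [2.0 * ((i * freq / sample_rate) % 1.0) - 1.0 for i in range(n)]

def one_pole_lowpass(samples, cutoff, sample_rate):
    """First-order lowpass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)
    out = []
    y = 0.0
    for x in samples:
        y += a * (x - y)   # move part of the way toward the input
        out.append(y)
    return out

# A bright 440 Hz sawtooth, dulled by a 200 Hz lowpass filter.
raw = sawtooth(440.0, 44100, 4410)
filtered = one_pole_lowpass(raw, 200.0, 44100)
```

Lowering `cutoff` removes more of the upper overtones, which is the "subtractive" step; sweeping it over time gives the familiar filter-sweep effect.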

One of the newest systems to evolve inside music synthesis is physical modelling. This involves taking models of the components of musical objects and creating systems which define action, filters, envelopes and other parameters over time. The definition of such instruments is virtually limitless, as one can combine any of the available models with any number of sources of modulation in terms of pitch, frequency and contour. For example, one could model a violin with characteristics of a pedal steel guitar and perhaps the action of a piano hammer. Physical modelling on computers becomes better and faster as processing power increases.

One of the easiest synthesis systems is to record a real instrument as a digitized waveform, and then play back its recordings at different speeds to produce different tones. This is the technique used in "sampling". Most samplers designate a part of the sample for each component of the ADSR envelope, and then repeat that section while changing the volume for that segment of the envelope. This lets the sampler have a persuasively different envelope using the same note. See also: Sample-based synthesis.

Synthesizer basics

There are two major kinds of synthesizers, analog and digital.

There are also many different kinds of synthesis methods, each applicable to both analog and digital synthesizers. These techniques tend to be mathematically related, especially frequency modulation and phase modulation.

- Subtractive synthesis

- Additive synthesis

- Granular synthesis

- Wavetable synthesis

- Frequency modulation synthesis

- Phase distortion synthesis

- Physical modelling synthesis

- Sampling

- Subharmonic synthesis

The start of the analogue synthesizer era

The first electric musical synthesizer was invented in 1876 by Elisha Gray, who was also an independent inventor of the telephone. The "Musical Telegraph" was a chance by-product of his telephone technology.

Gray accidentally discovered that he could control sound from a self vibrating electromagnetic circuit and in doing so invented a basic single note oscillator. The Musical Telegraph used steel reeds whose oscillations were created and transmitted, over a telephone line, by electromagnets. Gray also built a simple loudspeaker device in later models consisting of a vibrating diaphragm in a magnetic field to make the oscillator audible.

Other early synthesizers used technology derived from electronic analog computers, laboratory test equipment, and early electronic musical instruments. Ivor Darreg created his microtonal 'Electronic Keyboard Oboe' in 1937. Another one of the early synthesizers was the ANS synthesizer, a machine that was constructed by the Russian scientist Evgeny Murzin from 1937 to 1957. Only one copy of ANS was built, and it is currently kept at the Lomonosov University in Moscow. In the 1950s, RCA produced experimental devices to synthesize both voice and music. The giant Mark II Music Synthesizer, housed at the Columbia-Princeton Electronic Music Centre in New York City in 1958, was only capable of producing music once it had been completely programmed. The vacuum tube system had to be manually patched to create each new type of sound. It used a paper tape sequencer punched with holes that controlled pitch sources and filters, similar to a mechanical player piano but able to generate a wide variety of sounds.

In 1958 Daphne Oram at the BBC Radiophonic Workshop produced a novel synthesizer using her "Oramics" technique, driven by drawings on a 35mm film strip. This was used for a number of years at the BBC. Hugh Le Caine, John Hanert, Raymond Scott, Percy Grainger (with Burnett Cross), and others built a variety of automated electronic-music controllers during the late 1940s and 1950s.

By the 1960s, synthesizers were developed that could be played in real time but were confined to studios because of their size. These synthesizers were usually configured using a modular design, with standalone signal sources and processors being connected with patch cords or by other means, and all controlled by a common controlling device.

Early synthesizers were often experimental special-built devices, usually based on the concept of modularity. Don Buchla, Hugh Le Caine, Raymond Scott and Paul Ketoff were among the first to build such instruments, in the late 1950s and early 1960s. Only Buchla later produced a commercial version.

Robert Moog, who had been a student of Peter Mauzey, one of the engineers of the RCA Mark II, created a revolutionary synthesizer that could actually be used by pop musicians. Moog designed the circuits used in his synthesizer while he was at Columbia-Princeton. The Moog synthesizer was first displayed at the Audio Engineering Society convention in 1964. Like the RCA Mark II, it required a lot of experience to set up the machine for a new sound, but it was smaller and more intuitive. Less like a machine and more like a musical instrument, the Moog synthesizer was at first a curiosity, but by 1968 had caused a sensation.

Micky Dolenz of The Monkees bought one of the first three Moog synthesizers and the first commercial release to feature a Moog synthesizer was The Monkees' fourth album, Pisces, Aquarius, Capricorn & Jones Ltd., in 1967, which also became the first album featuring a synthesizer to hit #1 on the charts. Also among the first music performed on this synthesizer was the million-selling 1968 album Switched-On Bach by Wendy Carlos. Switched-On Bach was one of the most popular classical-music recordings ever made. During the late 1960s, hundreds of other popular recordings used Moog synthesizer sounds. The Moog synthesizer even spawned a subculture of record producers who made novelty "Moog" recordings, depending on the odd new sounds made by their synthesizers (which were not always Moog units) to draw attention and sales.

Moog also established standards for control interfacing, with a logarithmic 1-volt-per-octave pitch control and a separate pulse triggering signal. This standardization allowed synthesizers from different manufacturers to operate together. Pitch control is usually performed either with an organ-style keyboard or a music sequencer, which produces a series of control voltages over a fixed time period and allows some automation of music production.
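The logarithmic 1-volt-per-octave convention means that each additional volt doubles the oscillator frequency. A minimal sketch follows; the reference frequency assigned to 0 V is an assumption made for this example (instruments differ), and both function names are hypothetical.

```python
def cv_to_frequency(volts, base_freq=261.63):
    """Convert a 1 V/octave control voltage to a frequency in Hz.

    base_freq is the pitch produced at 0 V; 261.63 Hz (middle C) is an
    assumed reference chosen only for illustration.
    """
    return base_freq * 2.0 ** volts

def semitones_to_cv(semitones):
    """Control voltage for an interval in equal-tempered semitones."""
    return semitones / 12.0

# One octave (12 semitones) above the reference is exactly 1 volt higher.
octave_up = cv_to_frequency(semitones_to_cv(12), base_freq=100.0)
```

Because the scale is logarithmic, adding a fixed voltage transposes every note by the same musical interval, which is what lets a keyboard or sequencer from one maker drive an oscillator from another.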

Other early commercial synthesizer manufacturers included ARP, who also started with modular synthesizers before producing all-in-one instruments, and British firm EMS.

In 1970, Moog designed an innovative synthesizer with a built-in keyboard and without modular design--the analog circuits were retained, but made interconnectable with switches in a simplified arrangement called "normalization". Though less flexible than a modular design, it made the instrument more portable and easier to use. This first prepatched synthesizer, the Minimoog, became very popular, with over 12,000 units sold. The Minimoog also influenced the design of nearly all subsequent synthesizers.

In the 1970s miniaturized solid-state components allowed synthesizers to become self-contained, portable instruments. They began to be used in live performances. Soon, electronic synthesizers had become a standard part of the popular-music repertoire, with Chicory Tip's "Son of my Father" as the first #1 hit to feature a synthesizer.

The first movie to make use of synthesized music was the James Bond film "On Her Majesty's Secret Service", in 1969. From that point on, a large number of movies were made with synthesized music. A few movies, like 1982's John Carpenter's "The Thing", used all synthesized music in their musical scores.

Homemade synthesizers

During the late 1970s and early 1980s, it was relatively easy to build one's own synthesizer. Designs were published in hobby electronics magazines (notably the Formant modular synth, an impressive DIY clone of the Moog system, published by Elektor) and complete kits were supplied by companies such as PAiA in the US, and Maplin Electronics in the UK (although often these designs were actually rebranded versions of synths originally built by hobbyists; for example, the Maplin 5600 was a creation of the Australian scientist Trevor Marshall).

Electronic organs vs. synthesizers

All organs (including acoustic) are based on the principle of additive or Fourier Synthesis: Several sine tones are mixed to form a more complex waveform. In the original Hammond organ, built in 1935, these sine waves were generated using revolving tone wheels which induced a current in an electromagnetic pick-up. For every harmonic, there had to be a separate tonewheel. In more modern electronic organs, electronic oscillators serve to produce the sine waves. Organs tend to use fairly simple "formant" filters to effect changes to the oscillator tone--automation and modulation tend to be limited to simple vibrato.
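The additive principle described above, mixing one sine tone per harmonic, can be sketched as follows. The function name and the list-of-amplitudes interface are assumptions made for this illustration, loosely analogous to setting a level for each harmonic.

```python
import math

def additive_tone(fundamental, harmonic_amps, sample_rate, n):
    """Sum sine waves at integer multiples of the fundamental.

    harmonic_amps[0] is the level of the fundamental, harmonic_amps[1]
    the level of the second harmonic, and so on.
    """
    out = []
    for i in range(n):
        t = i / sample_rate
        out.append(sum(
            amp * math.sin(2.0 * math.pi * fundamental * (k + 1) * t)
            for k, amp in enumerate(harmonic_amps)
        ))
    return out

# A tone with a strong fundamental and progressively weaker harmonics.
tone = additive_tone(220.0, [1.0, 0.5, 0.25, 0.125], 44100, 441)
```

Changing the relative amplitudes changes the timbre without changing the pitch, since every component remains a multiple of the same fundamental.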

Most analog synthesizers produce their sound using subtractive synthesis. In this method, a waveform rich in overtones, usually a sawtooth or pulse wave, is produced by an oscillator. The signal is then passed through filters, which preferentially remove some overtones to obtain a sound which may be an imitation of an acoustical instrument, or may be a unique tonality not existing in acoustical form. An ADSR envelope generator then controls a VCA (voltage controlled amplifier) to give the sound a loudness contour.

Other circuits, such as waveshapers and ring modulators, can change the tonality in non-harmonic ways or create distortion effects which are often not found in natural sound sources. In spite of the popularity of modern digital and software-based synthesizers, the purely analog modular synthesizer still has its proponents, with a number of manufacturers producing modules little different from Moog's 1964 circuit designs, as well as many newer variations like the Moogalicious 900, invented in 1998.

Microprocessor controlled and polyphonic analog synthesizers

Early analog synthesizers were always monophonic, producing only one tone at a time. A few, such as the Moog Sonic Six, ARP Odyssey and EML 101, were capable of producing two different pitches at a time when two keys were pressed. Polyphony (multiple simultaneous tones, which enables chords), was only obtainable with electronic organ designs at first. Popular electronic keyboards combining organ circuits with synthesizer processing included the ARP Omni and Moog's Polymoog and Opus 3.

By 1976, the first true music synthesizers to offer polyphony had begun to appear, most notably in the form of Moog's Polymoog, the Yamaha CS-80 and the Oberheim Four-Voice. These early instruments were very complex, heavy, and costly. Another feature that began to appear was the recording of knob settings in a digital memory, allowing the changing of sounds quickly.

When microprocessors first appeared on the scene in the early 1970s, they were expensive and difficult to apply.

The first practical polyphonic synth, and the first to use a microprocessor as a controller, was the Sequential Circuits Prophet-5 introduced in 1978. For the first time, musicians had a practical polyphonic synthesizer that allowed all knob settings to be saved in computer memory and recalled by pushing a button. The Prophet-5 was also physically compact and lightweight, unlike its predecessors. This basic design paradigm became a standard among synthesizer manufacturers, slowly pushing out the more complex (and more difficult to use) modular design.

One of the first real-time polyphonic digital music synthesizers was the Coupland Digital Music Synthesizer. It was much more portable than a piano but never reached commercial production.

MIDI control

Synthesizers became easier to integrate and synchronize with other electronic instruments and controllers with the introduction in 1983 of MIDI, a digital serial communications interface. MIDI interfaces are now almost ubiquitous on music equipment, and commonly available on personal computers (PCs).

The so-called General MIDI (GM) software standard was devised in 1991 to serve as a consistent way of describing a set of 128 instrument tones, plus a percussion set, available to a PC for playback of musical scores. For the first time, a given MIDI preset would consistently produce an oboe or guitar sound (etc.) on any GM-conforming device. The file format .mid was also established and became a popular standard for exchange of music scores between computers.

OSC, OpenSound Control, is a proposed replacement for MIDI which was designed for networking. In contrast with MIDI, OSC is fast enough to allow thousands of synthesizers or computers to share music performance data over the internet in realtime.

FM synthesis

FM synthesis is when one oscillator is used to modulate another oscillator. This oscillator can then be used to modulate another oscillator or a parameter of the synth or 'patch' such as rate, depth, etc. of LFOs (Low Frequency Oscillators). These usually control parameters, but oscillators can modulate the LFOs to give a more complex sound. Oscillators can in turn modulate themselves and produce white noise. John Chowning of Stanford University is generally considered to be the first researcher to conceive of producing musical sounds by causing one oscillator to modulate the pitch of another. This is called FM, or frequency modulation, synthesis. Chowning's early FM experiments were done with software on a mainframe computer.

Most FM synthesizers use sine-wave oscillators (called operators) which, in order for their fundamental frequency to be sufficiently stable, are normally generated digitally (several years after Yamaha popularized this field of synthesis, they were outfitted with the ability to generate waveforms other than a sine wave). Each operator's audio output may be fed to the input of another operator, via an ADSR or other envelope controller. The first operator modulates the pitch of the second operator, in ways that can produce complex waveforms. FM synthesis is fundamentally a type of additive synthesis and the filters used in subtractive synthesizers were typically not used in FM synthesizers until the mid-1990s. By cascading operators and programming their envelopes appropriately, some subtractive synthesis effects can be simulated, though the sound of a resonant analog filter is almost impossible to achieve. FM is well-suited for making sounds that subtractive synthesizers have difficulty producing, particularly non-harmonic sounds, such as bell timbres.
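A two-operator arrangement, in which one sine-wave operator modulates another, can be sketched as below. This is a simplified illustration rather than any manufacturer's implementation: it is written in the closely related phase-modulation form, it omits the per-operator envelopes, and the names `fm_tone` and `index` are assumptions for this example.

```python
import math

def fm_tone(carrier_freq, mod_freq, index, sample_rate, n):
    """Two-operator FM: a modulator sine varies the phase of a carrier sine.

    index sets the modulation depth; 0 gives a plain sine wave, and
    larger values add more sidebands to the spectrum.
    """
    out = []
    for i in range(n):
        t = i / sample_rate
        modulator = math.sin(2.0 * math.pi * mod_freq * t)
        out.append(math.sin(2.0 * math.pi * carrier_freq * t + index * modulator))
    return out

# A non-integer carrier-to-modulator ratio gives inharmonic, bell-like partials.
bell = fm_tone(200.0, 280.0, 5.0, 44100, 4410)
```

In a full instrument, `index` would be driven by an envelope so that the spectrum evolves over the course of each note.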

Chowning's patent covering FM sound synthesis was licensed to giant Japanese manufacturer Yamaha, and made millions for Stanford during the 1980s. Yamaha's first FM synthesizers, the GS-1 and GS-2, were costly and heavy. Keyboardist Brent Mydland of the Grateful Dead used a GS-2 extensively in the 1980s. They soon followed the GS series with a pair of smaller, preset versions - the CE20 and CE25 Combo Ensembles - which were targeted primarily at the home organ market and featured four-octave keyboards. Their third version, the DX-7 (1983), was about the same size and weight as the Prophet-5, was reasonably priced, and depended on custom digital integrated circuits to produce FM tonalities. The DX-7 was a smash hit and can be heard on many recordings from the mid-1980s. Yamaha later licensed its FM technology to other manufacturers. By the time the Stanford patent ran out, almost every personal computer in the world contained an audio input-output system with a built-in 4-operator FM digital synthesizer -- a fact most PC users are not aware of.

The GS1 and GS2 had their small memory strips "programmed" by a hardware-based machine that existed only in Hamamatsu (Yamaha Japan headquarters) and Buena Park (Yamaha's U.S. headquarters). It had four 7" monochrome video monitors, each displaying the parameters of one of the four operators within the GS1/2. At that time a single "operator" was a 14"-square circuit board -- this was of course long before Yamaha condensed the FM circuitry to a single ASIC. Interestingly, what became the DX7's 4-stage ADSR at that time actually had many break points, about 75 (which proved quite ineffective in modifying sounds, hence the subsequent regress to the analog-synth type ADSR envelope generators).

During the time period from 1981-1984, Yamaha built a recording studio on Los Feliz Boulevard in Los Angeles dubbed the "Yamaha R&D Studio". Besides operating as a commercial recording studio facility, it served as a test area for new musical instrument products sold by what then was called the "Combo" division of Yamaha.

The Japanese engineers in Hamamatsu failed to create more than a handful of pleasing sounds for the GS1 with the 4-monitor programming machine, although one of them was used on the recording of "Africa" by Toto. At one point, Mr. John Chowning was invited to try to assist in creating new sounds with FM Synthesis. He came to the Yamaha R&D Studio, and spent a long time trying to make the FM theory result in a useful musical sound in practice. He gave up by the end of the week.

Thereafter, a select group of prominent studio synthesists was hired by Yamaha to try to create the voice library for the GS1 (with that same programming tool). They included Gary Leuenberger (who at that time owned an acoustic piano outlet in San Francisco), and Bo Tomlyn (who later founded Key Clique, a third-party DX7 software manufacturer).

Gary and Bo, together with a third programmer hired in the United Kingdom named David Bristow, created the bulk of the voices for the GS1 and GS2 that really caught the attention of both musicians and musical instrument dealers in the Yamaha channel, through both NAMM (National Association of Music Merchants) demonstrations and in-store demonstrations. Yamaha reports indicated that only 16 GS-1s were ever produced, and they were all either showcase pieces or donated to Yamaha-sponsored artists, which included (in the U.S.) Stevie Wonder, Toto, Herbie Hancock, and Chick Corea. Despite the fact that it wasn't actually sold (in the U.S.), the GS-1 bore a retail price of about $16,000, and the GS-2 was priced around $8,000.

The CE20 and CE25 "combo ensembles" were sold in the home piano/organ channel in the U.S., but they were accepted to a limited extent in the "professional" music scene. Their sounds were programmed in Japan by some of the engineering staff members who had been working on the GS1 and GS2.

The hardware-based FM "programmer" for the CE20/25 was a rack of breadboard electronics about the size of a telephone booth. The first DX7 print brochure distributed around the world included a picture of that programmer.

At one time, a young Yamaha engineer was assigned the odious task of listening to real instrument recordings, and trying to emulate them with that crude FM synthesis programmer for the CE20/25's EPROMs. That particular engineer was supposedly "locked" in a laboratory for an extended period of time, but eventually failed to produce what the U.S. market thought of as good results in terms of viable synthesizer voices.

Despite his difficulties, there were a couple of notable recordings produced in the U.S. utilizing the CE20, including Al Jarreau's "Mornin'".

Despite a lot of internal pressure from product management within the Yamaha International US division, and all that was going on at the time in terms of the adoption of the MIDI standard by many other companies in the industry, it was decided that the CE20 and CE25 did not need MIDI, since they were relegated to the "home" channel.

While all of this was going on, the DX7 development team was working on what would be the most successful Yamaha professional keyboard to date at the Nippon Gakki headquarters in Hamamatsu.

They called in the Yamaha International Corporation product managers from the U.S., and held a series of critical meetings in Hamamatsu to review their design concepts.

The Nippon Gakki engineering team was headed by "Karl" Hirano. At that time, many of the Japanese engineers who interfaced with US product managers adopted "American" nicknames. Hirano-san selected "Karl" because he liked Karl Malden (who, at the time, was on the long-running television show "Streets of San Francisco" with Michael Douglas).

Key to their design approach during the development stage (1981-82) was that, like the CE20 and CE25, the DX7 should be a "pre-set" synth, with only factory sounds, and no programming capability. Their rationale behind this was the extreme difficulty that the Yamaha team, Bo, Gary, and others had experienced at wielding FM synthesis and the multi-operator algorithms to make good sounds.

Luckily, the American product management staff had their way: to make the DX7 (and the relatively unsuccessful DX9) completely programmable instruments. As a result, the DX7 was an unheralded success, literally hundreds of great sounds were created, and an entire industry surrounding 3rd-party sounds was spawned. Further, as mentioned previously, OEM chipsets in PCs with the FM synthesis engine became standard fare in that industry.

Many of the preset "General MIDI" sounds in Wintel PCs are exact-DNA clones of numerous sounds originally created by Bo, Gary, Dave Bristow, and a handful of other synthesists. Some even retain the same or similar names that were given them during the DX7 era.

When the DX7 was finally introduced in the U.S., Bo Tomlyn, Peter Rochon (from Yamaha Canada) and other Yamaha staff went on the road to show off the product to the North American Yamaha dealer network. Those seminars included what was thought then to be a key element: training the dealers in how to operate and program the DX7. This was a vivid indication that the concern raised in Hamamatsu over the difficulty level of programming the machine still persisted.

But demand for the DX7 was so high in the first year of introduction that a "grey market" influx of units, originally purchased in Akihabara and other electronics outlets in Tokyo and other parts of Japan, quickly developed, and that became a serious concern for Yamaha International Corporation management.

A rumor was propagated by unknown people at Yamaha (or dealers) that the Japanese units would "blow up" upon being plugged into 120V AC outlets in the U.S., and that the sounds were different from the U.S. version. The latter "rumor" was true. The ROM cartridges included in the Japanese version of the DX7 were different from the American release: the U.S. version had many more of the pleasing sounds created by Bo Tomlyn and Gary Leuenberger.

The DX7 exceeded Yamaha's wildest expectations in terms of unit sales; it took many months for production to catch up with demand. The DX9 failed, most prominently because it was a four-operator (vs six in the DX7) FM and had a cassette tape storage system for voice loading/recording.

The rack-mounted TX216 and TX816, although relatively powerful studio instruments at that time, were also poor sellers, due to lack of support and difficult user interface.

After the successful introduction of the DX series, Bo Tomlyn, along with Mike Malizola (the original DX-7 Yamaha product manager) and Chuck Monte (founder of Dyno-My-Piano), founded "Key Clique, Inc.", which sold thousands of ROM cartridges with new FM/DX7 sounds (programmed by Bo) to DX7 owners around the world. Ironically, Key Clique's "Rhodes-electric-piano" voices led to the relative demise of the Fender Rhodes piano, and even the business started by co-founder Monte (Dyno-My-Piano's principal product was a Rhodes modification kit). Later, however, Key Clique's strong dominance in that marketplace was eventually eroded by people "sharing" Tomlyn voice parameter settings over Bulletin Boards on early computers, and many competitors entered the market all at once.

The final outcome was not far afield from what the Yamaha engineers had originally been concerned about: the huge library of sounds that propagated throughout the music industry for the FM instruments was actually created by only a handful of synth programmers. In numerous interviews and case studies conducted by Yamaha product management with both retail store owners and keyboardists, it was discovered that the average DX7 purchaser hardly ever wanted, or needed, to program his or her own synthesizer voices, since it was so difficult, and because there were so many great sounds available "off the shelf".

At the time when the FM Synthesis technology was first licensed from Stanford University, just about everyone in management at both Nippon Gakki and Yamaha International in the U.S. thought that FM would be "long-gone" by the time the license ran out (about 1996). That turned out to be completely untrue - witness the flourishing of the technology in the OPL chipsets in the majority of PCs around the world over the past many years (as mentioned previously in this article).

The list of prominent musical recordings utilizing the DX7 and the myriad of other FM synthesizers that were introduced later is significant, and new compositions utilizing FM are added to the world music library all the time. Software emulation of the DX7 voice library (including many of the Key Clique sounds) exists today in a wide range of both professional and 'pro-sumer' studio software products.

PCM synthesis

One kind of synthesizer starts with a binary digital recording of an existing sound. This is called a PCM sample, and is replayed at a range of pitches. Sample playback takes the place of the oscillator found in other synthesizers. In most instruments the sound is still processed with synthesizer effects such as filters, LFOs, ring modulators and the like. Most music workstations use this method of synthesis. Often the sample is not pitch-shifted by any dedicated process; it is simply played back at a different speed. For example, in order to shift the frequency of a sound one octave higher, it simply needs to be played at double speed. Playing a sample at half speed causes it to be shifted down by one octave, and so on.
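Changing pitch by changing playback speed amounts to stepping through the stored sample at a different rate. The sketch below assumes linear interpolation between neighbouring samples and applies no anti-aliasing, so it is an illustration of the idea only; the function name `play_at_speed` is hypothetical.

```python
def play_at_speed(sample, speed):
    """Read through a list of PCM values at the given speed.

    speed 2.0 plays one octave higher (and takes half as long),
    speed 0.5 plays one octave lower (and takes twice as long).
    Values between stored samples are linearly interpolated.
    """
    out = []
    pos = 0.0
    while pos < len(sample) - 1:
        i = int(pos)
        frac = pos - i
        out.append(sample[i] * (1.0 - frac) + sample[i + 1] * frac)
        pos += speed
    return out

ramp = [0, 1, 2, 3, 4, 5, 6, 7, 8]
octave_up = play_at_speed(ramp, 2.0)     # every second stored value
octave_down = play_at_speed(ramp, 0.5)   # interpolated in-between values
```

For an arbitrary interval of `s` semitones the speed would be `2 ** (s / 12)`, which generally lands between stored samples and is why interpolation is needed.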

By contrast, an instrument which primarily records and plays back samples is called a sampler. If a sample playback instrument neither records samples nor processes samples as a synthesizer, it is a rompler.

Because digital sound is stored as discrete measurements taken at fixed intervals of time, anti-aliasing and interpolation techniques (among others) are needed to obtain a natural-sounding waveform as the end result, especially if more than one note is being played or if arbitrary tone intervals are used. The calculations on sample data need to be of great precision (for high quality, more than 32 bits, and preferably at least 64 bits), especially when many parameters contribute to a specific sound: the more parameters there are, the more calculations must be made, and high precision keeps the rounding errors of those calculations from accumulating.

PCM sound is obtainable even with a 1-bit system, but the result is terrible, consisting mostly of noise, as there are only two levels, on and off. Since the beginning of PCM synthesis (before 1970), almost every bit depth from 1 to 32 has been used, but today the most common are 16 and 24 bits, with 32 bits as the next step up in quality.
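The effect of bit depth can be illustrated by rounding a value to the nearest of the levels that a given number of bits can represent. The sketch below is an assumption-laden simplification: the helper name `quantize` is hypothetical, the levels are spread evenly over the range -1.0 to 1.0, and real converters typically use two's-complement integer codes instead.

```python
def quantize(x, bits):
    """Round x (expected in [-1.0, 1.0]) to the nearest of 2**bits levels.

    With bits=1 only two levels exist, so every input collapses to
    -1.0 or 1.0; each additional bit halves the spacing between levels.
    """
    levels = 2 ** bits
    step = 2.0 / (levels - 1)             # spacing between adjacent levels
    index = round((x + 1.0) / step)       # nearest level number
    index = max(0, min(levels - 1, index))  # clip out-of-range input
    return -1.0 + index * step
```

With 16 bits the rounding error of this scheme is at most half a step, roughly 0.000015 of full scale, whereas with 1 bit the error can be as large as the signal itself.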

The physical modeling synthesizer

Physical modeling synthesis is the synthesis of sound by using a set of equations and algorithms to simulate a physical source of sound. When an initial set of parameters is run through the physical simulation, the simulated sound is generated.

Although physical modeling was not a new concept in acoustics and synthesis, it wasn't until the development of the Karplus-Strong algorithm, the subsequent refinement and generalization of the algorithm into digital waveguide synthesis by Julius O. Smith III and others, and the increase in DSP power in the late 1980s that commercial implementations became feasible.
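As an illustration of the idea, a commonly described simplified form of the Karplus-Strong plucked-string algorithm fills a short delay line with noise and then repeatedly averages adjacent values as they recirculate. The sketch below makes several assumptions that are not in the text above: the function name, the `decay` factor, and the fixed random seed are all illustrative choices, and this is not a description of any commercial implementation.

```python
import random


def karplus_strong(frequency, duration, sample_rate=44100, decay=0.996, seed=0):
    """Generate a plucked-string-like tone as a list of floats in [-1, 1].

    The delay line length sets the pitch; averaging neighbouring values
    acts as a gentle low-pass filter, so the tone mellows as it fades.
    `decay` and `seed` are assumptions of this sketch.
    """
    rng = random.Random(seed)
    period = max(2, int(round(sample_rate / frequency)))
    # The "pluck": one period of white noise in the delay line.
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(int(duration * sample_rate)):
        i = n % period
        j = (i + 1) % period
        out.append(line[i])
        # Feed back the damped average of this value and the next one.
        line[i] = decay * 0.5 * (line[i] + line[j])
    return out
```

A call such as `karplus_strong(440, 0.5)` returns half a second of a decaying tone near 440 Hz; lowering `decay` shortens the sustain.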

Following the success of Yamaha's licensing of Stanford's FM synthesis patent, Yamaha signed a contract with Stanford University in 1989 to jointly develop digital waveguide synthesis. As a result, most patents related to the technology are owned by Stanford or Yamaha. A physical modeling synthesizer was first realized commercially with Yamaha's VL-1, which was released in 1994.

The modern digital synthesizer

Most modern synthesizers are now completely digital, including those which model analog synthesis using digital techniques. Digital synthesizers use digital signal processing (DSP) techniques to make musical sounds. Some digital synthesizers now exist in the form of 'softsynth' software that synthesizes sound using conventional PC hardware. Others use specialized DSP hardware.

Digital synthesizers generate a digital sample, corresponding to a sound pressure, at a given sampling frequency (typically 44100 samples per second). In the most basic case, each digital oscillator is modeled by a counter. For each sample, the counter of each oscillator is advanced by an amount that varies depending on the frequency of the oscillator. For harmonic oscillators, the counter indexes a table containing the oscillator's waveform. For random-noise oscillators, the most significant bits index a table of random numbers. The values indexed by each oscillator's counter are mixed, processed, and then sent to a digital-to-analog converter, followed by an analog amplifier.
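The counter-and-table scheme described above can be sketched as follows. The table size, the 32-bit counter width, and the names `phase_increment` and `render` are assumptions chosen for illustration; only harmonic (table-lookup) oscillators are shown, and mixing is done by simple averaging.

```python
import math

TABLE_BITS = 10                          # 1024-entry waveform table (assumed)
TABLE_SIZE = 1 << TABLE_BITS
COUNTER_BITS = 32                        # counter width (assumed)
COUNTER_MASK = (1 << COUNTER_BITS) - 1
SINE_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def phase_increment(frequency, sample_rate=44100):
    """Amount added to the counter per sample for a given frequency."""
    return int(round(frequency * (1 << COUNTER_BITS) / sample_rate))


def render(frequencies, num_samples, sample_rate=44100):
    """Mix one table-lookup oscillator per frequency into a list of floats."""
    counters = [0] * len(frequencies)
    steps = [phase_increment(f, sample_rate) for f in frequencies]
    out = []
    for _ in range(num_samples):
        total = 0.0
        for k in range(len(counters)):
            # The most significant bits of the counter index the table.
            total += SINE_TABLE[counters[k] >> (COUNTER_BITS - TABLE_BITS)]
            # Advance the counter and let it wrap around, one cycle per wrap.
            counters[k] = (counters[k] + steps[k]) & COUNTER_MASK
        out.append(total / len(counters) if counters else 0.0)
    return out
```

Because the counter wraps around once per waveform cycle, a higher frequency simply means a larger increment; the resulting values would then go on to further processing and the digital-to-analog converter.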

To eliminate the difficult multiplication step in the envelope generation and mixing, some synthesizers perform all of the above operations in a logarithmic coding, and add the current ADSR and mix levels to the logarithmic value of the oscillator, to effectively multiply it. To add the values in the last step of mixing, they are converted to linear values.
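The trick relies on the identity log(a·b·c) = log(a) + log(b) + log(c): adding logarithms is equivalent to multiplying the original values. The sketch below illustrates this with floating-point base-2 logarithms; the function name is hypothetical, and hardware would more plausibly use small lookup tables than calls to a logarithm routine.

```python
import math


def apply_levels_log(osc_value, envelope, mix_level):
    """Scale an oscillator value by envelope and mix level using addition.

    The three magnitudes are converted to base-2 logarithms and summed,
    which multiplies them; the result is converted back to a linear
    value so that it can be added to other voices in the final mix.
    """
    if osc_value == 0 or envelope <= 0 or mix_level <= 0:
        return 0.0                        # log of zero is undefined; treat as silence
    sign = 1.0 if osc_value > 0 else -1.0
    log_sum = math.log2(abs(osc_value)) + math.log2(envelope) + math.log2(mix_level)
    return sign * 2.0 ** log_sum          # back to linear for the final addition
```

For instance, an oscillator value of 0.5 with an envelope level of 0.25 and a mix level of 0.5 gives logarithms of -1, -2 and -1, which sum to -4, and 2 to the power -4 is 0.0625, the same as the direct product.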

Software-only synthesis

The earliest digital synthesis was performed by software synthesizers on mainframe computers using methods exactly like those described in digital synthesis, above. Music was coded using punch cards to describe the type of instrument, note and duration. The formants of each timbre were generated as a series of sine waves, converted to fixed-point binary suitable for digital-to-analog converters, and mixed by adding and averaging. The data was written slowly to computer tape and then played back in real time to generate the music.
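The three steps named above (summing sine waves, mixing by adding and averaging, and converting to fixed-point values for a digital-to-analog converter) can be sketched as follows. All function names are hypothetical, and the 16-bit word size is an assumption for illustration; the text does not state what word size the mainframe programs used.

```python
import math


def additive_voice(partials, num_samples, sample_rate=44100):
    """Sum sine waves given as (frequency_hz, amplitude) pairs."""
    out = []
    for n in range(num_samples):
        t = n / sample_rate
        out.append(sum(a * math.sin(2 * math.pi * f * t) for f, a in partials))
    return out


def mix_average(voices):
    """Mix equal-length voices by adding them and dividing by their count."""
    count = len(voices)
    return [sum(frame) / count for frame in zip(*voices)]


def to_fixed_point(samples, bits=16):
    """Clip to [-1, 1] and scale to signed integers of the given width."""
    full_scale = (1 << (bits - 1)) - 1
    return [int(round(max(-1.0, min(1.0, s)) * full_scale)) for s in samples]
```

A note with three partials could be built with `additive_voice([(220, 0.6), (440, 0.3), (660, 0.1)], 44100)`, mixed with other voices using `mix_average`, and finally passed through `to_fixed_point` before being written out.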

Today, a variety of software is available to run on modern high-speed personal computers. DSP algorithms are commonplace, and permit the creation of fairly accurate simulations of physical acoustic sources or electronic sound generators (oscillators, filters, VCAs, etc.). Some commercial programs offer quite lavish and complex models of classic synthesizers, everything from the Yamaha DX7 to the original Moog modular. Other programs allow the user complete control of all aspects of digital music synthesis, at the cost of greater complexity and difficulty of use.

Virtual Orchestra

A virtual orchestra is a digital musical instrument, or musical network, capable of simulating the sonic and behavioural characteristics of a traditional acoustic orchestra in real time. The instrument often incorporates various synthesis and sound-generating techniques. The Virtual Orchestra is distinguished from traditional keyboard-based synthesizers by its live performance capabilities, which include the ability to follow a conductor's tempo and respond to a variety of musical nuances in real time. The instrument's intelligence is achieved through sophisticated decision-making algorithms that draw on knowledge from relevant areas of specialization, including acoustics, psychoacoustics, music history, and music theory.

Commercial synthesizer manufacturers

Notable synthesizer manufacturers past and present include:

- Access Music

- Alesis

- ARP

- Akai

- Buchla and Associates

- Casio

- Clavia

- Doepfer

- Electronic Music Studios (EMS)

- E-mu

- Ensoniq

- Fairlight

- Generalmusic

- Hartmann Music

- Kawai

- Korg

- Kurzweil Music Systems

- Moog Music

- New England Digital (NED)

- Novation

- Oberheim

- PAiA Electronics

- Palm Products GmbH (PPG)

- Realtime Music Solutions (RMS)

- Roland Corporation

- Sequential Circuits

- Technics

- Waldorf Music

- Yamaha

For a more complete list see Category:Synthesizer manufacturers

Classic synthesizer designs

This is intended to be a list of classic instruments which marked a turning point in musical sound or style, potentially worth an article of their own. They are listed with the names of performers or styles associated with them. For more synthesizer models see Category:Synthesizers.

- Alesis Andromeda (A synthesizer with modern digital control of fully analog sound producing circuitry)

- ARP 2600 (The Who, Stevie Wonder, Weather Report, Edgar Winter, Jean-Michel Jarre, New Order)

- ARP Odyssey (Ultravox and their former frontman John Foxx, Styx, Herbie Hancock)

- Buchla Music Box (Morton Subotnick, Suzanne Ciani)

- Casio CZ-101, an early low-cost digital synthesizer (Vince Clarke)

- Casio VL-1, more famous for its drum beat than its synthesizer sounds (Trio's Da Da Da)

- Clavia Nord Lead, the first modern analog modelling synthesizer using digital circuitry to emulate analog circuits (God Lives Underwater, Zoot Woman, The Weathermen, Jean-Michel Jarre)

- EMS VCS3 (Roxy Music, Hawkwind, Pink Floyd, BBC Radiophonic Workshop, Brian Eno)

- E-mu Emulator (The Residents, Depeche Mode, Deep Purple, Genesis)

- Fairlight CMI (Jean-Michel Jarre, Jan Hammer, Peter Gabriel, Mike Oldfield, Pet Shop Boys, The Art of Noise, Kate Bush)

- Hartmann Music Neuron (Hans Zimmer, Peter Gabriel, Guns N' Roses, Nikos Patrelakis, David Sylvian)

- Korg Karma

- Korg M1 (Bradley Joseph)

- Korg Triton (Bradley Joseph, Derek Sherinian)

- Kurzweil K2000, a synthesizer with the V.A.S.T. system (Jean-Michel Jarre)

- Lyricon, the first mass-produced wind synthesizer (Michael Brecker, Tom Scott, Chuck Greenberg, Wayne Shorter)

- Moog modular synthesizer (Rush [pedals only], Wendy Carlos, Tomita, Tonto's Expanding Head Band, Emerson, Lake and Palmer, The Beatles, Weezer)

- Moog Taurus (Rush, Genesis, The Police, U2, The Rentals)

- Minimoog (Pink Floyd, Rush, Yes, Emerson, Lake and Palmer, Stereolab, Devo, Ray Buttigieg, George Duke)

- NED Synclavier (Michael Jackson, Stevie Wonder, Laurie Anderson, Frank Zappa, Pat Metheny Group)

- Oberheim OB-Xa (Rush, Prince, Styx, Supertramp, Van Halen)

- PPG Wave (Rush, Depeche Mode, The Fixx, Thomas Dolby)

- Roland D-50 (Jean-Michel Jarre, Enya)

- Roland JD-800 (Bradley Joseph)

- Roland JP-8000

- Roland Jupiter-4 (a-ha, John Foxx, Duran Duran, The Human League, Simple Minds)

- Roland Jupiter-8 (a-ha, Rush, Duran Duran, OMD, Huey Lewis & the News)

- Roland MT-32 (de facto standard in computer game music and effects)

- Roland TB-303 (Techno, Acid House)

- Sequential Circuits Prophet 5 (Berlin, Phil Collins, The Cars, Steve Winwood)

- WaveFrame AudioFrame (Peter Gabriel, Stevie Wonder)

- Yamaha DX7 (Rush, Steve Reich, Depeche Mode, Zoot Woman, The Cure, Brian Eno, Howard Jones, Nitzer Ebb)

- Yamaha CS-2

- Yamaha SHS-10, one of the first "keytars" from the 1980s (Showbread)